In the iOS app we are working on, we found it fun to insert Emoji characters in the communication between people’s iPhones. However, as we scaled the app up to pass more information through a database, and started using Urban Airship for push notifications, we lost the ability to send Emoji characters. I wasn’t too concerned about it, since we were planning some architectural changes in the future, and I was hoping the issue would resolve itself if we somehow introduced components that better supported UTF-8. Over the last couple of days, I got curious as to why it was failing and had quite a ride figuring out both the number of layers that UTF-8 support needs to be in, as well as how some things can support some UTF-8 characters and not others. This is their story.

First, a little bit about the architecture. Currently, the backend is a RESTful API written in Ruby using Sinatra. We currently use ActiveRecord to abstract the connection to the MySQL database, and the connections to the DB are all made via the mysql gem. After first hitting the issues, “the google” suggested that it was the mysql Ruby gem that needed to be updated to support UTF-8. I had issues updating to the mysql2 gem for Ruby on our Amazon AWS servers, and promptly gave up since we aren’t going to be using MySQL for deployment. However, I did notice that I couldn’t store Emoji characters directly in the MySQL table. Something was fishy.

It turns out that MySQL had only partial support for UTF-8 characters. To understand the reason, you have to understand UTF-8 a bit better. UTF-8 is meant to be backward compatible with 7-bit ASCII, so the first 128 characters (0-127) are encoded in UTF-8 as the same single bytes as in ASCII. If you send an email message encoded as UTF-8 to someone whose reader only supports ASCII, they can read it fine as long as the message sticks to ASCII characters. However, as you add more bytes to UTF-8, you get more available characters. So a UTF-8 character can be 1, 2, 3, or 4 bytes long depending on the character that needs to be represented.
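To make the variable length concrete, here is a small Ruby sketch that prints how many bytes a few characters take in UTF-8. The sample characters are my own picks for illustration (U+1F60C is just one example of an Emoji above U+FFFF), not necessarily the ones from our app.

```ruby
# Byte length of a few sample characters when encoded as UTF-8.
# The samples are illustrative picks, not taken from the app.
SAMPLES = {
  "A"         => "\u0041",     # plain ASCII letter
  "e-acute"   => "\u00E9",     # accented Latin letter
  "trademark" => "\u2122",     # symbol with a four-hex-digit code
  "emoji"     => "\u{1F60C}"   # Emoji with a code above U+FFFF
}.freeze

# Maps each sample name to the number of bytes its UTF-8 encoding uses.
def utf8_byte_lengths(samples)
  samples.transform_values { |s| s.encode("UTF-8").bytesize }
end

utf8_byte_lengths(SAMPLES).each do |name, bytes|
  puts "#{name}: #{bytes} byte(s)"
end
```

The four samples come out as 1, 2, 3, and 4 bytes respectively, which is the whole range UTF-8 can use.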

So the first step I took was to verify that the database, tables, and columns were set up for UTF-8. I had the encoding of the ActiveRecord connection set to UTF-8:

    ActiveRecord::Base.establish_connection(
      :adapter  => 'mysql',
      :host     => '127.0.0.1',
      :database => 'some_db',
      :username => '**',
      :password => '*******',
      :pool     => 8,
      :encoding => 'utf8')

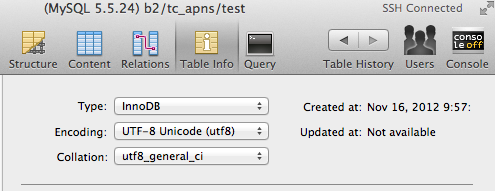

The table was set to a Latin-based encoding, so that needed to be changed as well.

So now I was pleased with myself. I could insert some Emoji characters into the DB. So I fired up the character palette and inserted an Emoji: ?.

#FAIL

So now I dropped down to try and create the simplest situation possible. I tried modifying the my.cnf file to support UTF-8, used the mysql command line, and everything else under the sun. It didn’t work. I began to think that I had somehow done something wrong to MySQL in the past, and now it was getting even. So I slept on it and swore to mend my ways and try to do good deeds to bring up my karma ratings.

I then discovered that MySQL’s utf8 setting only had PARTIAL support for UTF-8 characters, and that full support is in the utf8mb4 setting. So now I headed back to MySQL and, quite pleased with myself, set it to utf8mb4, inserted a smiley face into MySQL, and it showed up!
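For reference, the switch to utf8mb4 boils down to a few SQL statements. Here is a rough Ruby sketch that builds them. The helper name, the `messages` table, and the `utf8mb4_unicode_ci` collation are my assumptions for illustration, and you would still need to run the statements through whatever MySQL client you use (utf8mb4 needs MySQL 5.5.3 or later).

```ruby
# Builds the MySQL statements that move a connection, database, and table
# to utf8mb4. The names passed in below are placeholders, and the default
# collation is an assumption; pick whatever suits your data.
def utf8mb4_statements(database, table, collation = "utf8mb4_unicode_ci")
  [
    # Make the client connection talk utf8mb4 as well.
    "SET NAMES utf8mb4",
    # Change the default for tables created later in this database.
    "ALTER DATABASE `#{database}` CHARACTER SET utf8mb4 COLLATE #{collation}",
    # Convert the existing table and its text columns.
    "ALTER TABLE `#{table}` CONVERT TO CHARACTER SET utf8mb4 COLLATE #{collation}"
  ]
end

utf8mb4_statements("some_db", "messages").each { |sql| puts "#{sql};" }
```

Building the statements as strings keeps the sketch runnable without a database; each existing table would need its own ALTER TABLE statement.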

I broke out the champagne, grabbed my wife’s slipper, took the lampshade from the living room, and headed out to celebrate. As just one last test, I checked our app to make sure I could see the Emoji characters, and again I was confronted by crappy empty boxes that meant that UTF-8 support was still broken. The champagne went back in the fridge, the slipper back in the closet, and the lampshade off my head and back on the lamp. I needed to dig deeper.

So I fired up the IRB command line interpreter for Ruby, and pasted in all the class definitions for the DB. Then I tried to add and query Emoji characters in the DB. The characters that were already in the DB were returned fine. I also tried to populate the DB via ActiveRecord, and the only way I could get Emoji characters in the DB was via the slash notation “\u2122”. But when I tried to use a straight unicode character, such as “?”, it would fail. So I fought with it for a while, decided that the Ruby strings were encoded incorrectly, and attempted to use straight ASCII strings with slash encoded (“\u2122”) unicode characters. This was painful: the user can add in any character, and I would have to pull out the unicode characters and escape them. I got it done, and before heading back to the champagne, tried it again and it STILL FAILED.

#sadface

I then decided to sleep. Sleep is good. Sleep helps. When I woke up, I took another run at it. How is it different? What could possibly be the difference between slash notation in ASCII and UTF-8 encoded text then turned into ASCII slash notation? Then I tried all my tests again. And this time, instead of using a random unicode code (“\u2430”), I figured out the Emoji character that was failing and the code associated with it. I was using the closed-eyed smiley, and the unicode code was something like “\u1F3212”. When I converted that to slash notation, I got back “\uF3212”. Wait a minute! I lost a digit! So then I tried the ones that were in the DB that worked, and it turns out they were all Emoji whose codes fit in four hex digits, so they take fewer bytes in UTF-8. When I tried those, our app worked fine with UTF-8 characters. So something was messed up with the longer UTF-8 characters, the ones whose codes need more than four hex digits.
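The lost digit makes more sense once you know that a JSON-style `\u` escape only has room for four hex digits, so a code above U+FFFF has to be written as two escapes (a UTF-16 surrogate pair). Here is a minimal Ruby sketch of that arithmetic. The `json_escape` helper is my own name for illustration, and U+1F60C is an assumed example rather than the exact Emoji I was testing with.

```ruby
# Returns the \uXXXX escape(s) for one code point, the way JSON spells them.
# json_escape is a hypothetical helper written for this post.
def json_escape(codepoint)
  # Codes up to U+FFFF fit in a single four-digit escape.
  return format("\\u%04X", codepoint) if codepoint <= 0xFFFF

  # Anything higher is split into a UTF-16 surrogate pair.
  offset = codepoint - 0x10000
  high = 0xD800 + (offset >> 10)    # top 10 bits
  low  = 0xDC00 + (offset & 0x3FF)  # bottom 10 bits
  format("\\u%04X\\u%04X", high, low)
end

puts json_escape(0x2122)   # \u2122
puts json_escape(0x1F60C)  # \uD83D\uDE0C
```

Any layer that assumes every character fits in one four-digit escape will mangle the second kind of code.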

Digging a bit more, I discovered that the JSON gem has issues with these longer UTF-8 characters. I found a posting on Stack Overflow with a workaround.

Adding in:

    if defined?(ActiveSupport::JSON)
      [Object, Array, FalseClass, Float, Hash, Integer, NilClass, String, TrueClass].each do |klass|
        klass.class_eval do
          def to_json(*args)
            super(args)
          end

          def as_json(*args)
            super(args)
          end
        end
      end
    end

resolved the issue.
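If you want to check whether your own JSON layer has the same problem, a quick round trip is enough. This is only a sketch using Ruby’s standard json library; the `survives_json?` helper and the sample characters are my own assumptions, and it does not go through ActiveSupport or the database.

```ruby
require "json"

# Round-trips a string through JSON and reports whether it came back intact.
# survives_json? is a hypothetical helper written for this post.
def survives_json?(text)
  # ascii_only forces non-ASCII characters into \u escapes, which is where
  # characters above U+FFFF tend to get mangled.
  encoded = JSON.generate([text], ascii_only: true)
  JSON.parse(encoded).first == text
end

puts survives_json?("\u2122")      # code that fits in four hex digits
puts survives_json?("\u{1F60C}")   # code above U+FFFF
```

On a healthy stack both lines print true; if the second one prints false, the JSON layer is where the longer characters are getting lost.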

So to recap, the issue had many layers: MySQL, ActiveRecord, the mysql gem, and JSON.